A few weeks ago, I went to Atlanta to tour a data center. HP invited me down to take a look at a specific customer of theirs. I live about 4 hours away, so I drove down the night before, excited to see racks of hardware and blinking lights, and to further degrade my hearing by being inside the noisy room that held all that hardware.

This was a fairly large data center. It is the QTS Atlanta facility, one of the larger data centers in the world: 970,000 square feet total, of which 323,000 square feet is actual raised floor space. It’s a big building. The usual sights abound, like large pipes to carry water in and out of the facility to cool everything.

Here are some pics of the big pipes:

There are also pipes for air instead of water. At least, I am assuming they are for air.

Here is one on top of the giant water tank that holds 1 million gallons of water. That water actually comes from the roof, which is built to capture rainwater. It saves them a lot of money on their water bill. It is hard to tell from this photo, but the slits are wide enough for a cell phone to fall through.

They also have their own power substation.

Let me show you an interior shot to give you some indication of size. This is a hallway that runs along the side of the main data center floor. The hallway is 1000 feet long. The picture doesn’t do it justice.

Another interesting thing to point out is that the raised floor in the data center sits 48 inches high, a bit deeper than in the data centers I am used to. Since data cabling is all top of rack, only power is run sub-floor, which leaves plenty of room for cold air to flow.

Now that stuff is interesting, but I don’t know too many of my peers who get all hot and bothered by power and cooling. Nothing against those who do, but I just take those things for granted. If you want a better tour, you can watch the following video:

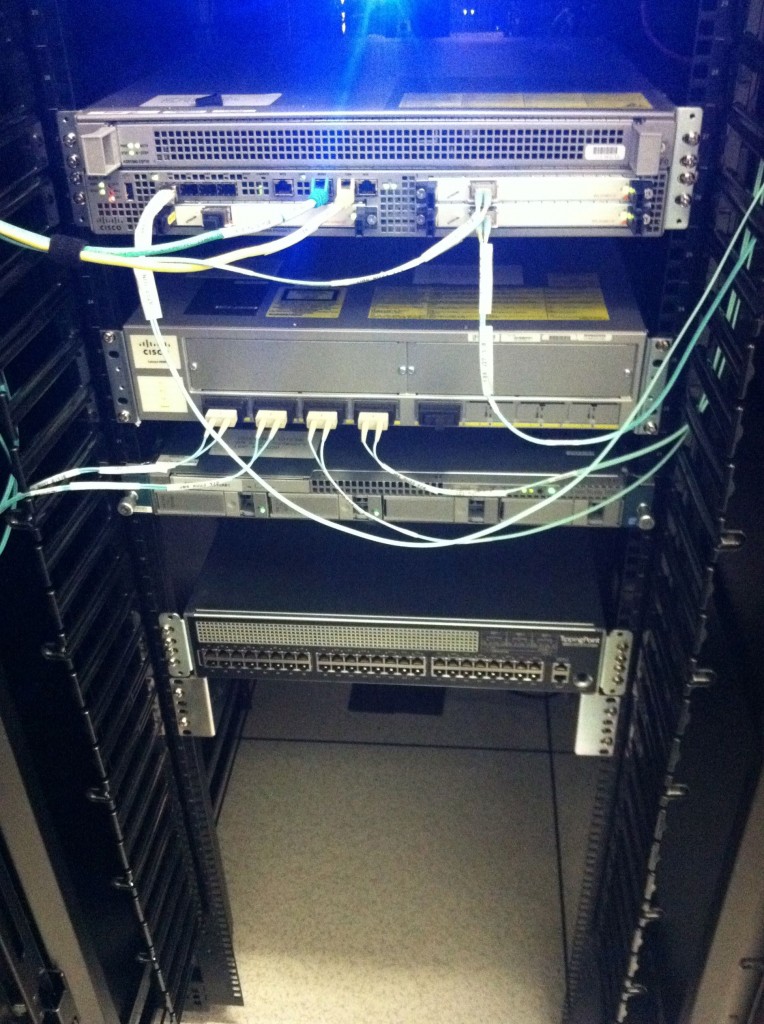

What I wanted to see was the gear churning out the 1’s and 0’s. I knew it would involve a heavy amount of HP hardware, but I was surprised at how much Cisco gear was installed as well.

A Little Background

Diversified Agency Services (DAS) has over 100 sub-companies that each had their own IT infrastructure and IT staff. Imagine trying to manage and account for all of that. DAS undertook a project well over a year ago to consolidate the core data and applications of these 100-plus companies into a centralized environment. While there are obvious cost savings at play, it really comes down to ensuring IT can provide a reliable service to its customers. In this case, those customers are the 100-plus companies DAS is responsible for.

I was able to talk to several of the people responsible for making this consolidation happen while I was touring the Atlanta data center. The following people were at our disposal during the tour:

Jason Cohen – Global CIO for DAS

Jerry Kelly – North American CIO

BG Naran – Deputy General Manager and Data Center Architect for CDS

Mike Banic – VP Global Marketing, HP Networking

DAS was pretty happy with HP as their main vendor of choice for this project due to the high-touch support they got. I saw a similar sentiment from DreamWorks when I was able to speak with one of their key IT people at Interop in Las Vegas back in May of this year. Obviously an organization the size of DAS is going to get high-touch support from whatever vendor they do business with. At least, that vendor had better give them high-touch support if they want to keep doing business with them. I suspect (I forgot to ask this) that HP was attractive due to the sheer amount of support capabilities and the large product portfolio that they possess. They also chose the upper crust of technology that HP offers, with the exception of the servers. However, I would consider the servers they chose workhorses on the rackmount side of things. They aren’t bad, they just aren’t as hip as the blade servers. Of course, take that with a grain of salt. I am a network guy, and of all the pictures I took, only one had servers in it. 🙂

How Does It All Get Managed?

DAS has a data center in Phoenix that has similar gear and soon, they will be building one out in London. As a side note, they chose Phoenix and Atlanta based heavily on environmental factors as well as for latency purposes. Low incidence of earthquakes, floods, etc. Atlanta is prone to tornados, but the data center there can withstand the bulk of tornados.

Back to Dallas, though. Their network operations facility is in Dallas, TX. Their staff have been pulled from a variety of the agencies that DAS oversees. They basically picked engineers and administrators from the diverse companies and pulled them all together under CDS, which is the new company that runs IT for DAS. Complicated, isn’t it? 🙂

The way the North American CIO Jerry Kelly explained it to us, they had a bunch of generalists spread across the various companies. Now, thanks to the centralization underway with this project, they are able to develop highly focused engineers and architects and rely less on third-party consultants to figure out solutions to problems.

Why Did You Pick That Platform?

I asked BG Naran, who is kind of the head geek (I mean that in a GOOD way), about the choices they made in terms of platforms. He told me that a lot of it was based on the pre-existing knowledge and comfort level their ops folks back in Dallas had.

What Did They Pick?

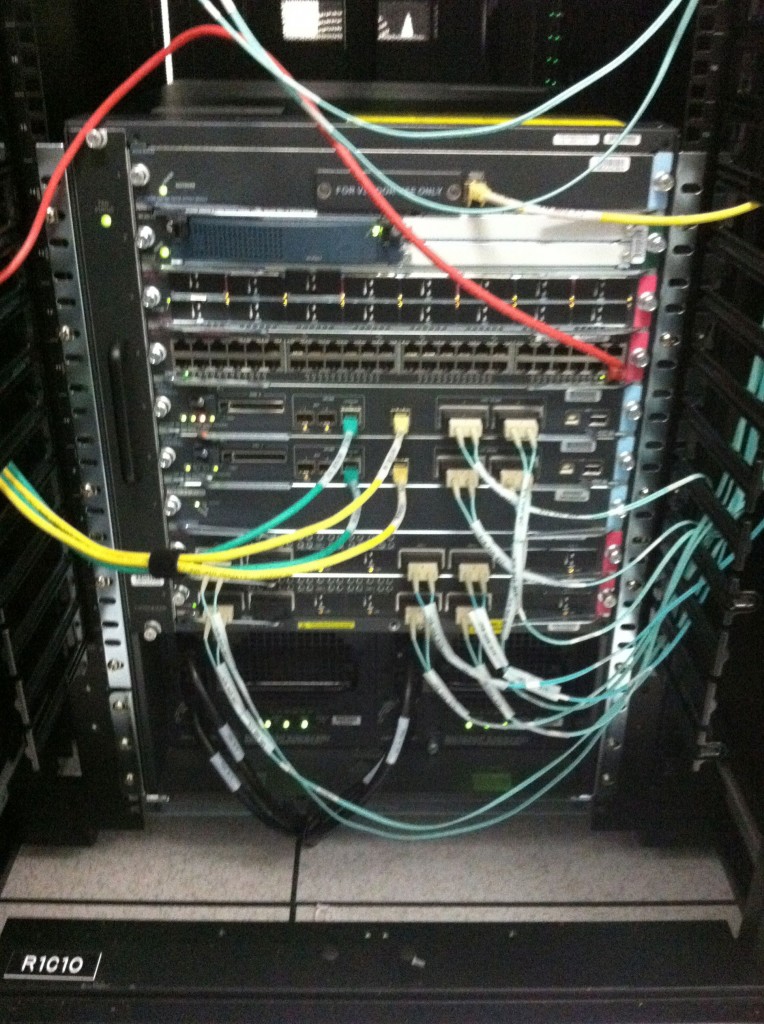

Their core switches are HP 12518’s. They utilize IRF between the 12518’s so it appears as a single logical switch. Up to 4 of these switches can be joined via IRF.

Check out this homemade air baffle. The 12518 blows out a ton of air, and this engineering feat ensures it blows right up into the hot air intake on the ceiling. That’s a custom little part. It isn’t a manufactured product. Duct tape, baby! It still has uses. 🙂

Top of rack switches to connect the servers are HP 5830’s and 5900’s. This gives a fair amount of 1/10/40Gig capabilities. The 5900’s in particular are for the ESXi clusters.

They are using the Nexus 1000v as the distributed virtual switch within VMware as evidenced by the Nexus 1010. They also have a fair amount of ASR 1K routers. Of course, my favorite switch in the entire Cisco portfolio is the 4900M, which they had several of.

Load balancing is handled within the data center by Cisco ACE. Load balancing across the multiple data centers is handled by Cisco ACE GSS.

Various Cisco switches/routers handle the monitoring network and what I assume was Internet/MPLS connectivity.

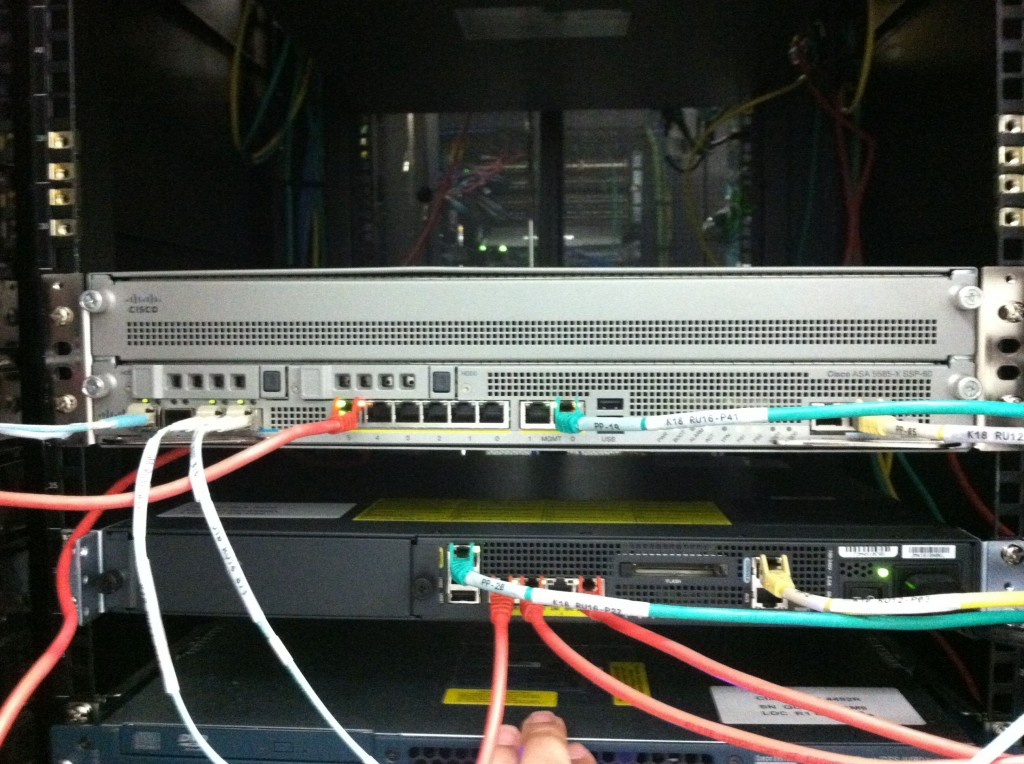

Big and small ASAs were in use.

For IPS, they stuck with HP and chose Tipping Point.

Closing Thoughts

All in all, it was an interesting tour of an impressive facility. What I found even more interesting was that the DAS folks are sharing this consolidation project through a website with blog posts as well as some pretty decent videos. Other than advertising for the company, there doesn’t seem to be much to gain for them. I suppose they are genuinely interested in sharing their experiences with the IT community at large. Take a look at the link here:

The geek in me cannot resist the urge to take pictures of equipment I find interesting. Even if I have seen the platform a million times, I still find it interesting. In case you might be wondering why certain things weren’t cabled up, it is because this data center is about to go live. It is still being spun up, hence the devices without cables.

All that cool stuff – e.g. the HP 12518s – and then they chose ACE! Wonder if they just regret that one a little?

Of course, you can’t seem to get a straight answer out of Cisco about what they’re doing with ACE, but I would be pretty uncomfortable if I was an ACE customer. Not so much because of the rumours, but because they can’t seem to get themselves organised enough to make an official statement.
