If you hang around various IT departments long enough, you are bound to run into “shelfware”. That’s the term used to describe software that is purchased, but is either never used, or used for a brief period and then forgotten. Ask yourself this: why would a company spend money on software and never use it? The answer can vary, but in my experience, it generally happens because the IT staff is too busy to give it the attention it needs.

Let’s face it. Your average corporate IT staff is overworked and understaffed. There is always more work than there are bodies to cover the workload. In my opinion, that’s the main reason so many IT people move between companies on a fairly regular basis. Burnout.

Then, there’s the problem of finding qualified people to perform the work. Maybe it is due to companies not wanting to invest in training their people or maybe demand is greater than the supply of talent out there.

One of the major pain points I have noticed in the past several years has been visibility into the network and the systems and applications that run on it. This is not as big a problem in larger environments where IT staffing and budgets are at a decent level. In small and medium environments, visibility tends to be poor.

Why Is Visibility Needed?

Networks are far more complex these days. I remember when I first got involved with IT in the mid-90s. Everything was simple compared to today. An application was typically tied to a couple of servers, and all the end users had some local piece of software installed that interfaced with these servers. Web services were in their infancy.

Fast forward to 2013. Web-based applications dominate most of the environments I work in. These applications are typically multi-tiered: a web server talks to application servers, and those application servers talk to a bunch of database servers. Load balancers send client requests to servers based on any number of factors. Complexity always seems to be going up and never down.

If you get into an environment with limited visibility into the network and applications, it isn’t a pretty sight when things stop working. Conference calls and meetings are spun up and everyone scurries about checking their various areas of responsibility to try and find the culprit.

APM To The Rescue!

Application Performance Management has become essential for many networks in recent years. It isn’t enough to know that all your servers are up and running. The days of pinging a box and marking it as good are over. Often, there are numerous things that have to be checked on each server, be it web, application, or database, just to determine whether it is healthy and can serve clients. The APM systems that give you insight into the cause of a problem can be as complex as the application you are trying to monitor.
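To make the “ping is not enough” point concrete, here is a minimal sketch of a health check that only marks a server as good when every check passes. The check names and the stub functions (`ping_ok`, `http_status_ok`, `db_query_fast_enough`) are hypothetical placeholders I made up for illustration; in a real environment each one would probe the actual service.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class CheckResult:
    name: str
    ok: bool
    detail: str = ""


def run_checks(checks: Dict[str, Callable[[], bool]]) -> List[CheckResult]:
    """Run every check; a check that raises counts as a failure."""
    results = []
    for name, check in checks.items():
        try:
            ok = bool(check())
            detail = "" if ok else "check returned a failing value"
        except Exception as exc:
            ok = False
            detail = f"{type(exc).__name__}: {exc}"
        results.append(CheckResult(name, ok, detail))
    return results


def is_healthy(results: List[CheckResult]) -> bool:
    """A server is healthy only if all of its checks passed."""
    return all(r.ok for r in results)


# Hypothetical stand-ins for real probes (assumptions, not a real API).
def ping_ok() -> bool:
    return True  # the box answers ping


def http_status_ok() -> bool:
    return True  # the web tier returns a successful status


def db_query_fast_enough() -> bool:
    return False  # the database answers, but too slowly


results = run_checks({
    "ping": ping_ok,
    "http_status": http_status_ok,
    "db_query": db_query_fast_enough,
})
failed = [r.name for r in results if not r.ok]
```

In this example the server answers ping and serves pages, yet it is still reported as unhealthy because the database check fails. That is exactly the kind of problem a ping-only monitor would miss.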

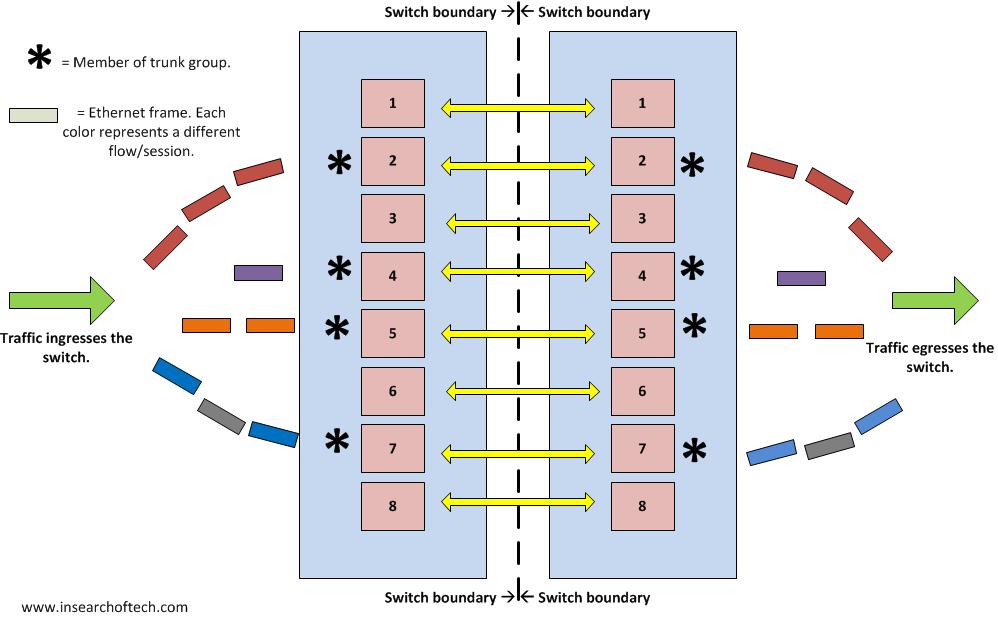

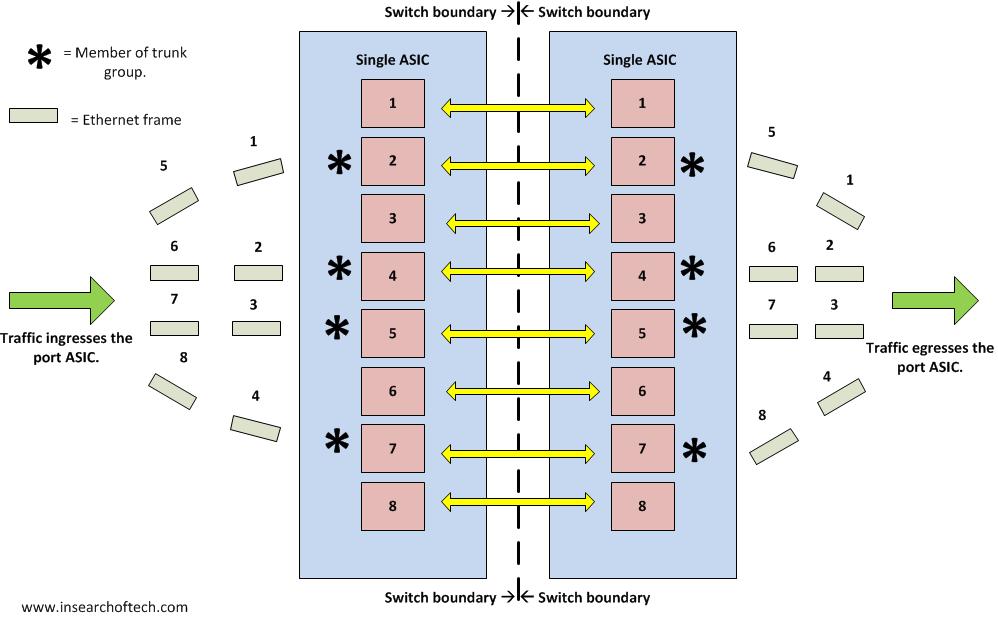

Let’s say that you run a simplified APM system like ExtraHop, which I wrote about here, that doesn’t require software agents on servers and uses packet captures to determine application health. You still need someone who can look at the data it presents and interpret it correctly to solve the problem.
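As a rough illustration of what agentless, packet-based monitoring means in general, the sketch below pairs each request seen on the wire with its response and derives per-server response times. This is not ExtraHop’s implementation, which I have no visibility into. The `Record` type, the field names, and the sample data are all assumptions made up for the example, and real traffic would need far more careful matching than one outstanding request per client and server pair.

```python
from collections import defaultdict
from statistics import median
from typing import Dict, Iterable, List, NamedTuple, Tuple


class Record(NamedTuple):
    """One observed message, as a capture tool might summarize it (hypothetical)."""
    timestamp: float  # seconds since the start of the capture
    client: str
    server: str
    kind: str         # "request" or "response"


def response_times(records: Iterable[Record]) -> Dict[str, List[float]]:
    """Pair each request with the next response on the same client/server pair."""
    pending: Dict[Tuple[str, str], float] = {}
    times: Dict[str, List[float]] = defaultdict(list)
    for rec in sorted(records, key=lambda r: r.timestamp):
        key = (rec.client, rec.server)
        if rec.kind == "request":
            pending[key] = rec.timestamp
        elif rec.kind == "response" and key in pending:
            times[rec.server].append(rec.timestamp - pending.pop(key))
    return dict(times)


def slow_servers(times: Dict[str, List[float]], threshold_s: float) -> List[str]:
    """Servers whose median response time exceeds the threshold."""
    return sorted(s for s, v in times.items() if median(v) > threshold_s)


# Made-up sample data: the web server is quick, the database is not.
SAMPLE = [
    Record(0.0, "client1", "web1", "request"),
    Record(0.25, "client1", "web1", "response"),
    Record(1.0, "web1", "db1", "request"),
    Record(2.5, "web1", "db1", "response"),
    Record(3.0, "client2", "web1", "request"),
    Record(3.125, "client2", "web1", "response"),
]

suspects = slow_servers(response_times(SAMPLE), threshold_s=0.5)
```

Nothing runs on the servers themselves; everything is inferred from traffic that was observed passively. Even so, someone still has to decide what threshold counts as slow and what to do about the result.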

Maybe your company has a person or a group whose sole task is managing the various monitoring systems. I was in one of those environments several years ago, and that person was a very valuable resource. What if you don’t have anyone dedicated to watching the monitoring systems? What then? That’s where software tends to end up as shelfware. It’s running. It’s watching various things, but it generally only gets looked at when there is a problem. When there is a problem, hopefully you have someone on the IT staff who knows enough about your applications to make an intelligent guess as to what the problem is. If you don’t, there is an alternative.

Introducing Atlas Services

While at the Interop Las Vegas show in May of this year, I spent some time talking with ExtraHop about their Atlas service. I work for an ExtraHop reseller and wanted to learn more about this particular offering.

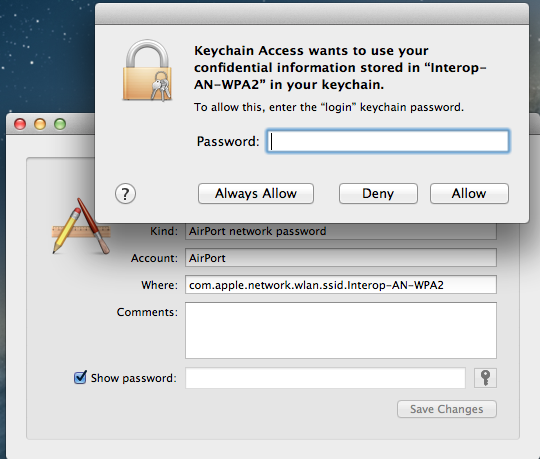

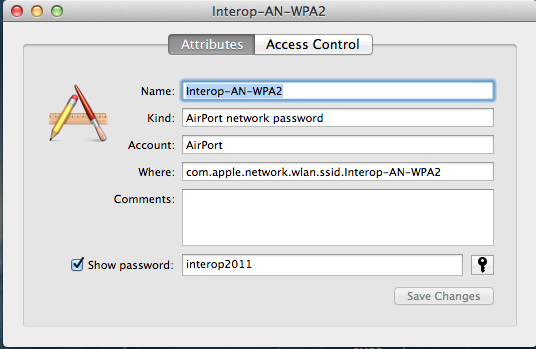

In an effort to take some of the difficulty out of APM, ExtraHop offers a managed service called Atlas. The concept is pretty simple. You drop in one or more ExtraHop appliances (physical or virtual), feed them the appropriate network data, and ExtraHop takes care of the rest. In a non-Atlas deployment, you have the same appliances (all commodity Dell hardware for the physical boxes), but you are left on your own to configure them and interpret the data.

With Atlas, engineers at ExtraHop review the data they capture from your network and build reports showing you where actual problems are. The longer they perform this service for customers, the more data they have to make even better recommendations as to how your network or systems should be configured. I liken this to security vendors that get data from their customer base and use it to create better signatures or methods to prevent exploits from bypassing their hardware and software. At some point, ExtraHop might be able to automate this process because they have seen a particular issue show up thousands or millions of times.

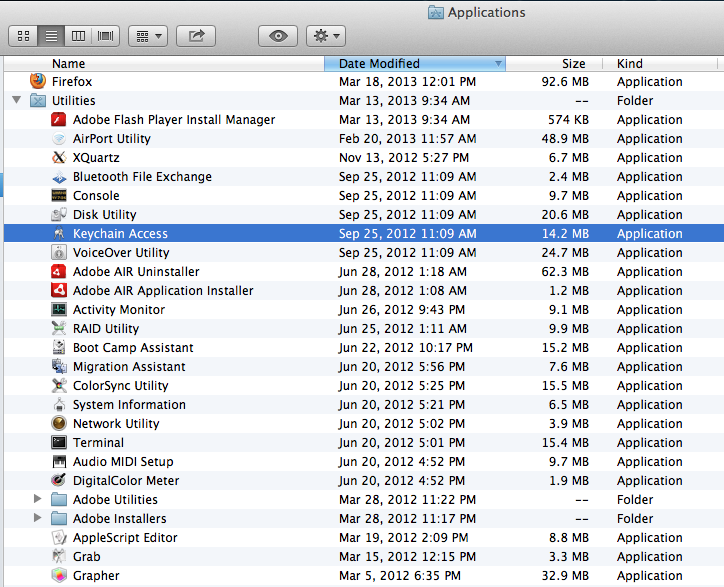

Here is a sample report from Atlas:

The link to the actual report is here.

What’s The Value?

There are a few things I can think of where a managed APM service like this helps.

First, you don’t necessarily have to employ a dedicated APM resource. ExtraHop’s engineers can use their expertise to provide a level of knowledge and service comparable to having someone on staff who focuses solely on APM. This moves you closer to being proactive as opposed to reactive.

Second, it frees up your overworked IT staff to focus on other things. A lot of times when I am doing work for a client as a consultant, it isn’t because I am more capable than the in-house IT staff. It is because they have too much to do and just need to offload some work to a third party.

Closing Thoughts

APM is not easy. Implementation can be difficult, and getting the maximum value out of the product tends to be a challenge without a dedicated resource tending to it. The Atlas service from ExtraHop is an attempt to take the headache out of APM. Their product is already easy to use without the Atlas service.

Shelfware as a whole is probably not going to go away. However, with an offering like Atlas from ExtraHop, there is no reason for your APM solution to end up collecting dust instead of giving you as much value as it can.

You can check out more about ExtraHop at www.extrahop.com.