The most recent Cisco Nexus implementation I was involved in had some challenges. Since you can’t really have an in-depth discussion on Twitter with its 140-character limit, I figured I would throw this post together and share in the fun that is Cisco Nexus switch deployments. Maybe it will help someone else with their own Cisco Nexus implementation. As always, comments are welcome. Maybe you will see something I missed, or have a question about why something was done a certain way.

I have removed anything that identifies the customer this network belongs to, and I received the customer’s permission to post this.

Initial Build

This was going to be a standard Nexus 7010 dual-core install with a handful of FEX 2232/2248’s for top-of-rack (ToR) server connectivity. Ordinarily this is a pretty straightforward install. The Nexus 7000 series switch does not support dual-homing a 2200 series FEX the way the Nexus 5000 series does. However, there is a way to connect a FEX to a pair of Nexus 7000’s and have it fail over if one of the 7000’s fails. You just have to wait about 90 seconds for the FEX to come online with the second Nexus 7000. This particular configuration is not supported by TAC (as far as I know), so getting it to work is done without Cisco’s assistance.
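For reference, here is roughly what the standard single-homed FEX association looks like on one Nexus 7010. It is a sketch of the building block only, not the unsupported failover workaround itself: the port numbers (e1/1-2) and FEX number 101 are hypothetical, and the steps for having the second 7010 pick up the FEX are not shown.

```
! Default/admin VDC: make the FEX feature set available
install feature-set fex
! In the VDC that owns the M132 ports
feature-set fex

! FEX fabric uplinks (hypothetical ports); M1 ports are Layer 3 by default
interface ethernet 1/1-2
  switchport
  switchport mode fex-fabric
  fex associate 101
  channel-group 101
  no shutdown

! Fabric port-channel toward FEX 101
interface port-channel 101
  switchport
  switchport mode fex-fabric
  fex associate 101
  no shutdown
```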

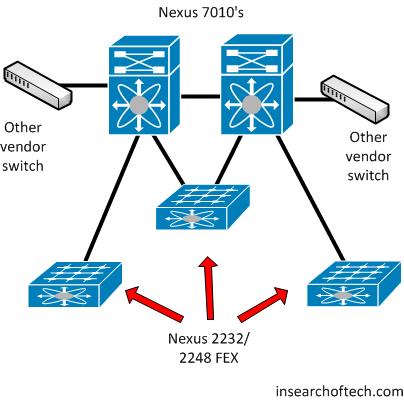

Here’s what the network would look like at this particular data center after the Nexus 7010’s were put in place:

Know what those non-Cisco switches connected to each Nexus 7010 mean from a Spanning Tree perspective? You guessed it: MST. That changes things a little bit, but not too much.
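If the non-Cisco switches are meant to share an MST region with the Nexus 7010’s (an assumption here), the region parameters have to match on every switch. A minimal NX-OS sketch; the region name, revision, VLAN range, and priorities are hypothetical values, not the customer’s.

```
! Same on both Nexus 7010s (and mirrored on the non-Cisco switches)
spanning-tree mode mst

spanning-tree mst configuration
  ! Name, revision, and VLAN-to-instance mapping must match on every
  ! switch in the region, or the switches see a region boundary
  name DC1
  revision 1
  instance 1 vlan 10-99

! Primary 7010 as root for the CIST (instance 0) and instance 1;
! use a higher value (e.g. 8192) on the secondary 7010
spanning-tree mst 0-1 priority 4096
```

On NX-OS, changes made in `spanning-tree mst configuration` mode take effect when you exit that mode.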

I should also point out that these Nexus 7010’s were purchased with a single N7K-M132XP-12 line card in each chassis. That meant the only way into the box was via a 10Gbps port. We had a 2Gbps circuit connecting this data center to another as the primary link, and a backup 1Gbps circuit connecting to that same remote data center. The 2Gbps circuit came in on a 10Gbps handoff, so connecting it to the primary Nexus 7010 was not a problem. The other circuit came in on a 1Gbps handoff, which ruled out terminating it on the secondary Nexus 7010, since neither chassis had any 1Gbps interfaces. There was the option to buy a module for the Nexus 7010 that supported 1Gbps fiber connections, but that card lists at $27,000 (it appears you CAN buy anything on Amazon!). Even with a decent discount, that’s a lot of money to spend on a single 1Gbps connection, and it still doesn’t fix the inability to have redundant connections to a FEX with fast failover.

Design Change

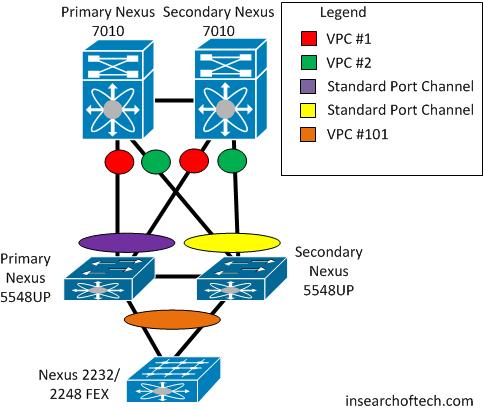

Due to issues around redundancy and flexibility of 1/10Gig connectivity, the design was changed to include Nexus 5548UP switches. A pair of 5548UP’s were purchased, and the design changed to the following:

*Note – Although the orange oval is labeled FEX 101 in the legend, it was actually several FEX’s numbered 101, 102, 103, etc. I realize the image looks confusing, but I am too lazy to go back and redo the Visio drawing. Just know that it represents multiple FEX’s.

Implementation went fine. Everything appeared to be functioning normally. vPC was up on the 7k’s as well as the 5k’s. I tried to make the drawing above reflect what the configuration would look like; I didn’t want to drop lines and lines of config for 4 devices, as it would really inflate the size of this post. Each Nexus 5548UP connected to both Nexus 7010’s via vPC, and each FEX connected to both Nexus 5548UP’s via vPC as well.
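A trimmed, hypothetical sketch of the Nexus 5548UP side of this kind of design (double-sided vPC up to the 7010’s plus active-active FEX) might look like the following. It is not the customer’s actual config: port numbers, the vPC domain ID, port-channel numbers, and IP addresses are all assumptions, and the second 5548UP mirrors this with the keepalive addresses swapped.

```
! Nexus 5548UP #1 (hypothetical values; #2 mirrors this)
feature lacp
feature vpc
feature fex

vpc domain 10
  ! Keepalive over mgmt0 in the management VRF
  peer-keepalive destination 10.10.10.12 source 10.10.10.11 vrf management

! vPC peer link between the two 5548UPs
interface ethernet 1/31-32
  switchport mode trunk
  channel-group 1 mode active

interface port-channel 1
  switchport mode trunk
  vpc peer-link

! One LACP bundle toward both Nexus 7010s
interface ethernet 1/1-2
  switchport mode trunk
  channel-group 20 mode active

interface port-channel 20
  switchport mode trunk
  vpc 20

! FEX 101 dual-homed (active-active); fabric links use a static bundle
interface ethernet 1/17
  switchport mode fex-fabric
  fex associate 101
  channel-group 101

interface port-channel 101
  switchport mode fex-fabric
  fex associate 101
  vpc 101
```

In a double-sided vPC like this, the 5548UP pair and the 7010 pair need different vPC domain IDs, because the domain ID is used to derive the vPC LACP system ID.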

During testing right after the implementation, one problem did surface. An iSCSI storage array connected to one of the Nexus 2248’s was unable to communicate with another iSCSI storage array in a different data center. Basic ICMP (i.e. ping) communication was possible, but some of the traffic was dropped erratically. It looked like an asymmetric flow issue, where one path was getting black-holed somewhere along the way. There was also no consistent ratio of answered to unanswered pings. Since ping is not a foolproof test, we also tried restarting the replication process. Keep in mind that all ping tests were THROUGH the Nexus gear and not TO the Nexus gear. The Nexus 7000 has a built-in Control Plane Policing (CoPP) policy that will start dropping excessive ICMP traffic sent TO the switch itself. I’ve personally seen a case where someone thought the Nexus 7000’s we had just installed for them were broken because they were throwing massive amounts of pings at the Nexus 7000’s and not getting 100% of the requests answered. Just because there is no response doesn’t mean there is a problem. Sometimes it is by design.
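To check whether CoPP is what’s dropping pings aimed at a Nexus 7000 itself, a couple of show commands help. Transit traffic (pings THROUGH the box) is forwarded in hardware and is not subject to CoPP; only traffic destined to the switch’s own control plane is policed.

```
! Is a CoPP policy attached to the control plane?
show copp status

! Per-class counters for the attached policy; violated counts in the
! class that matches ICMP point to policing, not a forwarding fault
show policy-map interface control-plane
```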

Due to the replication failure, we started to unplug some things. The FEX 2248 in question was unplugged from the second Nexus 5548UP it was connected to. That secondary Nexus 5548UP still had connectivity to the primary Nexus 5548UP and both Nexus 7010’s. The problem still happened.

Next, we reconnected the FEX 2248 to the secondary Nexus 5548UP. Then, we disconnected the secondary Nexus 7010 from the secondary Nexus 5548UP. The problem still occurred.

We ended up disconnecting all FEX 2232/2248’s and both Nexus 7010’s from the secondary Nexus 5548UP. The only thing the secondary Nexus 5548UP was connected to was the primary Nexus 5548UP. Everything stabilized after we isolated the secondary Nexus 5548UP.
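While isolating a vPC peer like this, a handful of standard NX-OS show commands on each 5548UP can help narrow down where traffic is going. This is a generic checklist, not a record of what was run during the outage.

```
! Peer status, keepalive status, and per-vPC state
show vpc brief

! Parameters that must match between the vPC peers
show vpc consistency-parameters global

! Member state of each port-channel, including FEX fabric bundles
show port-channel summary

! FEX online state and the fabric ports it is using
show fex detail

! MST port roles and states
show spanning-tree mst
```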

Lab It Up!

The following week, I was able to recreate the environment where we saw the failures, minus the iSCSI arrays. I had another pair of Nexus 7010’s and Nexus 5548UP’s intended for another data center this customer was going to upgrade in the near future. I also had several FEX’s to use for testing, along with a 3750 switch that was going to serve as the connection point for the management interfaces on each Nexus 7010/5548UP. To simulate the remote data center, I had a Cisco 3560-X switch with a 10Gbps interface module connected to the primary Nexus 7010.

I plugged a host into the Nexus 2248 FEX to act as the remote target. I plugged my laptop into the 3560-X on a completely separate VLAN from the link between the 3560-X and the Nexus 7010. This ensured I was crossing multiple layer 3 boundaries between my system and the simulated remote target host. Testing showed no problems whatsoever. No packet loss. Nothing.
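The lab’s “remote data center” boils down to a routed 10Gbps link to the 7010 plus a separate VLAN for the laptop. A minimal IOS sketch for the 3560-X; the interface names, VLAN 50, and all addressing are hypothetical.

```
! Hypothetical 3560-X standing in for the remote data center
ip routing

! Routed 10Gbps link to the primary Nexus 7010
interface TenGigabitEthernet1/1/1
 no switchport
 ip address 10.99.0.2 255.255.255.252

! Laptop lives in its own VLAN/subnet
vlan 50
interface Vlan50
 ip address 10.50.0.1 255.255.255.0

interface GigabitEthernet0/1
 switchport mode access
 switchport access vlan 50

! Everything else goes to the Nexus 7010
ip route 0.0.0.0 0.0.0.0 10.99.0.1
```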

If At First You Don’t Succeed……

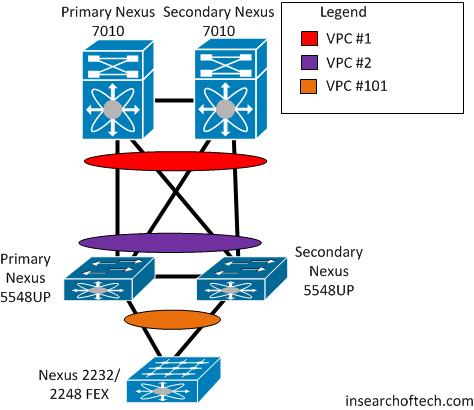

The next week, we went back to the data center and reconnected everything back up to the second Nexus 5548UP. The configuration of the Nexus 7010’s and Nexus 5548UP’s were modified to where the configuration reflected this:

The iSCSI arrays were now able to connect to each other across the WAN. However, another problem surfaced. We weren’t able to connect to the management side of the Nexus 5548UP’s consistently.

Remote Management Woes

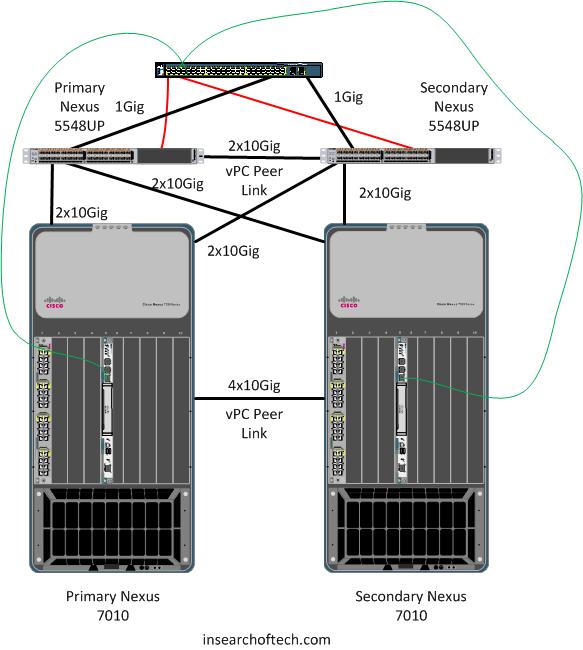

While administration of the Nexus 7010’s was in-band via IP addresses assigned to SVI’s, management access to the Nexus 5548UP’s was, as far as I knew at the time, only possible via the dedicated management interface. The problem is that this particular client doesn’t have a dedicated out-of-band management network. These Nexus 7010 and Nexus 5548UP switches are going to be the only switches left in place at this data center once everything gets migrated off the other vendor’s switches and onto the FEX 2248’s and FEX 2232. Because of that, the gateway for the Nexus 5548UP’s resides on the Nexus 7010’s. Normally, this wouldn’t be that big of a problem: just plug the management interfaces into free ports on the Nexus 7010’s and be done with it. There’s just one small problem with that. These 7010’s only have 10Gig interfaces. Checking Amazon again, the module for 48x1Gbps copper connections is listed at $27,000. Factor in your discount, but even at 50% off, that’s still $13,500 per Nexus 7010, and we would need 2 of them.

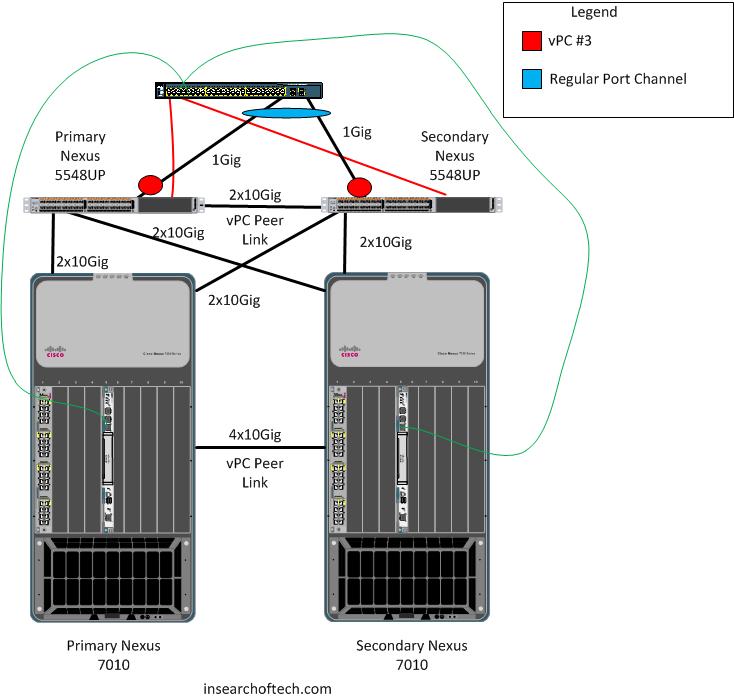

In order to give the Nexus 5548UP management interfaces layer 3 reachability through the Nexus 7010’s for remote management, they had to connect to a separate switch. We used a Cisco 3560G switch since it was on hand and had both copper and fiber capabilities. The management interfaces from the Nexus 5548UP’s connected into copper Gig ports on the 3560G. The 3560G connected to the Nexus 5548UP’s via its 1Gig SFP slots. The management traffic would then ride the normal 10Gbps uplinks from the 5548UP’s to the 7010’s. Simple, right? 😉 The black lines below represent regular links between the 7010’s and the 5548UP’s, as well as the fiber connections from the 3560G to the 5548UP’s. The red lines are the management interface connections from the 5548UP’s to the 3560G switch. The green lines are the management interface connections from the 7010’s to the 3560G switch.
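On the 5548UP side, this original arrangement amounts to mgmt0 in the management VRF with a default route to the gateway on the 7010, plus a 1Gbps SFP front-panel port in the management VLAN cabled to the 3560G. A sketch with hypothetical VLAN 100, 10.10.10.0/24 addressing, and port e1/32:

```
! Nexus 5548UP #1: mgmt0 lives in the management VRF
interface mgmt0
  ip address 10.10.10.11/24

vrf context management
  ! Gateway for the management VLAN, hosted on a Nexus 7010
  ip route 0.0.0.0/0 10.10.10.1

vlan 100
  name MGMT

! 1Gbps SFP port cabled to the 3560G (plain access port, pre-fix)
interface ethernet 1/32
  switchport mode access
  switchport access vlan 100
  speed 1000
```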

Back to the problem at hand. We could reach the Nexus 7010’s from across the WAN with no problems. We weren’t able to reach the Nexus 5548UP’s from across the WAN or from the local Nexus 7010’s. Additionally, remote access to the 3560 was pretty much non-existent. From the console port of the 3560, I could connect to both 5548UP’s. From the 5548UP’s and the 3560, Spanning Tree looked fine. This was an MST environment, so Spanning Tree was pretty simple to troubleshoot. We found that if we disconnected one of the uplinks from a 3560G SFP port to a 5548UP, the problem went away. Reconnect the second uplink and the problem reappeared.

The inter-switch links connecting the 3560G with the 5548UP’s were set up as access ports. The only VLAN flowing across those links was the management VLAN that the 3560G and the 5548UP’s used for remote device administration. In the case of the 5548UP’s, the management interfaces also carried the vPC peer-keepalive link. For the 7010’s, I just needed 2 IP’s that could talk to each other through the 3560G switch. I followed Chris Marget’s suggestion and used 169.254.1.X addresses for the 7010 management IP’s. These IP’s were only used for the vPC peer-keepalive link.
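The 7010 keepalive arrangement is short in config form. The /24 mask, the vPC domain ID, and the .1/.2 host addresses are assumptions; the second 7010 swaps source and destination.

```
! Nexus 7010 #1: mgmt0 used only for the vPC peer-keepalive
interface mgmt0
  ip address 169.254.1.1/24

! mgmt0 is in the management VRF, so the keepalive uses that VRF
vpc domain 1
  peer-keepalive destination 169.254.1.2 source 169.254.1.1 vrf management
```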

Testing went on for a bit, and then the client made a suggestion that ultimately fixed it. He asked if we could set up both uplinks from the 3560 as a vPC. Of course! The 5548UP’s were reconfigured for vPC on the interfaces connecting to the 3560, and the 3560’s SFP interfaces were configured as a standard port-channel. The problem went away after that. The final management connectivity looked like the following:
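Sketched in config, the fix looks something like this: the 3560G’s two SFP uplinks become one port-channel, and the 5548UP pair presents a matching vPC, so Spanning Tree sees a single logical link instead of two. LACP is an assumption here (the post only says “standard port-channel”), and the port numbers, VLAN 100, and port-channel IDs are hypothetical. It assumes `feature vpc` and the vPC domain are already configured on the 5548UP’s.

```
! On BOTH Nexus 5548UPs (hypothetical port e1/32, VLAN 100)
feature lacp

interface port-channel 30
  switchport mode access
  switchport access vlan 100
  vpc 30

interface ethernet 1/32
  switchport mode access
  switchport access vlan 100
  speed 1000
  channel-group 30 mode active

! Cisco 3560G: both SFP uplinks in one LACP bundle
interface range GigabitEthernet0/49 - 50
 switchport mode access
 switchport access vlan 100
 channel-group 1 mode active

interface Port-channel1
 switchport mode access
 switchport access vlan 100
```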

A few questions regarding the Nexus line that perhaps someone can answer:

- Is it assumed that organizations deploying Cisco Nexus switches are going to have a dedicated management network?

- Will the Nexus 7000’s ever support dual connections to Nexus 2000 FEX’s? Were that feature available, no 5548UP’s would have been purchased for this particular implementation.

- Is there ever going to be a comprehensive Nexus 7000/5000/2000 design guide? Or, is it buried somewhere deep within the Cisco website and I just missed it?

- On a lighter note (no pun intended), why in the world are the Nexus 2000 FEX’s so heavy if they don’t even do local switching?

Good post! It’s always nice to read about implementation issues. Hmm, I mean, it’s not nice to read that someone had issues, but real stories like this are something you rarely see.

Regarding the 5548 SVIs, we have one environment where the 5548UPs are managed via SVIs. There was a “management” command enabled, but the documentation was not totally clear about its implications.

Your environment is kind of an example of the Real Network Plumbing: costs were avoided by leaving out the 10/100/1000 modules in N7ks, and therefore you needed to implement something else instead. I’m a fan of the separate management switches myself as you can use cheap older switches there nicely. In the latest implementation I even demanded dedicated wavelengths in the CWDM systems to interconnect the management switches independent of the production switches.

It would be nice to see the actual (relevant) configurations of the switches, to be able to comment on the issues you first faced with the 3560, if you want to find out why the first approach did not work. The vPC approach is still the way I would have implemented it in the first place.

Markku,

Thanks for the comments. Every implementation is different, but each one makes you stronger and better able to do the next one. I find, though, that you wish you could go back in time and redo the ones you worked on several years ago!

The config on the 3560 was nothing but access ports, all in the same VLAN, for the 5548UP connections. The ports connecting to each 5548UP’s management interface had the same access VLAN configured as the ports connecting to a non-management interface on each 5548UP, which served as the 3560’s uplinks. The 3560 also had an IP assigned to an SVI in that same management VLAN, but IP routing was not enabled on the 3560. It had a basic default gateway entry pointing to the gateway, which happened to be one of the Nexus 7010’s. We also verified that spanning tree was working properly when both 5548UP’s were connected to the 3560: one port would be blocking and the other forwarding.
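Reconstructed from that description, the 3560’s original configuration would look roughly like this. Port ranges, VLAN 100, and the addresses are hypothetical.

```
! Cisco 3560G as described: Layer 2 only, everything in the mgmt VLAN
vlan 100
 name MGMT

! Ports cabled to the 5548UP mgmt0 interfaces
interface range GigabitEthernet0/1 - 2
 switchport mode access
 switchport access vlan 100

! SFP uplinks to a front-panel port on each 5548UP (no port-channel)
interface range GigabitEthernet0/49 - 50
 switchport mode access
 switchport access vlan 100

! Management IP for the 3560G itself; IP routing stays disabled
interface Vlan100
 ip address 10.10.10.5 255.255.255.0

! Gateway lives on one of the Nexus 7010s
ip default-gateway 10.10.10.1
```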

Ok, I don’t see any obvious reason for it not to work if there were no STP edge ports configured etc. I was just thinking about some bridging loop scenario.

You are right about going back in time. It is somehow encouraging that you can see some of your previous implementations in a different light, and maybe even avoid repeating them in other cases if needed 😀

Hi Matt,

Thank you for sharing your experience with a Nexus implementation, and sorry to read how rough it was. I’m wondering if using the in-band management capability of the N5Ks would have simplified your design and implementation significantly.

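```
configure terminal
! SVIs must be enabled before an interface vlan can be created
feature interface-vlan
interface vlan xxx
  ip address w.x.y.z/zz
  ! Mark this SVI for in-band management
  management
  no shutdown
end
copy running-config startup-config
```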

This would have avoided the need to add the 3560, and some of the woes you faced there. I’ve also heard of customers who connected the mgmt0 interface to one of the front-panel ports with a GLC-T, so that mgmt0 was “sort of” in-band.
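Filling in that snippet with hypothetical values (VLAN 100, 10.10.10.0/24, gateway 10.10.10.1 on a 7010), one detail worth adding: the SVI lives in the default VRF, so it needs its own default route. A route under `vrf context management` only applies to mgmt0.

```
configure terminal
feature interface-vlan

vlan 100
  name MGMT

interface vlan 100
  ip address 10.10.10.11/24
  management
  no shutdown

! Default route in the default VRF, used by the in-band SVI
ip route 0.0.0.0/0 10.10.10.1
end
copy running-config startup-config
```

VLAN 100 also has to be allowed on the 5548UP uplinks to the 7010’s for this to be reachable.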

As for your specific questions:

1 – Not at all. I’d say the majority of customers do not have this, but the option is there for those who do. You can manage both Nexus 7000 and Nexus 5000 via OOB or inband.

2 – We’re evaluating this as an option. Long story, we can discuss at Live if you like. I hear beer makes it better.

3 – CVDs are in the works. In the interim, there are tons of materials on PEC and Cisco Live Virtual (365?).

4 – Wish I knew! 🙂

Just as a trivia note, the N5K has CoPP as well. On the Nexus 5000 series it cannot be modified, but on the 5500 it can as of 5.1(3)N1(1).

-Ron Fuller

@ccie5851

Ron,

Thanks for the input. As you, Chris, and Markku pointed out, a better way would probably have been to run management in-band via an SVI. I am not quite sure how in the world I missed that approach, but I did. Other than having to give up additional interfaces for the vPC keepalive link, are there any tradeoffs you can think of to running management in-band as opposed to on the dedicated management interface?

In the case of the 7k’s, I know the vPC keepalive can run in a separate VRF on an interface other than the vPC peer link. I’d lose a port or two on the existing single M132 module for the vPC keepalive link. These 7010’s only have the single module and supervisor, so there isn’t any way to spread the various vPC interfaces across modules. I suppose with a single line card it doesn’t really matter, as that line card failing will render the 7010 useless anyway. As for using the management interface on the 7k’s, I seem to recall reading in one of the 7k design guides that you couldn’t directly connect 2 management interfaces to each other with a crossover cable. I could be mistaken, though.
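For the 7k option mentioned above, a keepalive in its own VRF on an M132 port might look like this. The VRF name, port, domain ID, and /30 addressing are hypothetical; the peer uses .2 as its source and .1 as its destination.

```
! Nexus 7010 #1: dedicated VRF for the vPC peer-keepalive
vrf context vpc-keepalive

interface ethernet 1/32
  no switchport
  ! vrf member clears existing L3 config, so set it before the IP
  vrf member vpc-keepalive
  ip address 10.255.255.1/30
  no shutdown

vpc domain 1
  peer-keepalive destination 10.255.255.2 source 10.255.255.1 vrf vpc-keepalive
```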

You can cross-connect the management ports of two N7K supervisors, but only in single-sup installations. In dual-sup configurations the mgmt port is disabled on the standby sup, and you cannot reliably predict which sup is active at any given point. That’s why you should not connect the mgmt ports face to face in such situations. But in your current scenario (single sup), a crossover is OK.

The 5ks most certainly support in-band management via an SVI. Did you enable ‘feature interface-vlan’?

Thanks for the comment. I missed the inband management piece on the 5k. Now that you all mention it, I can see it plain as day. 🙂

On the Nexus 2000 weight question, I have for years maintained that Cisco lines some chassis with lead, just so you can feel like you’re getting something for your money. 🙂
