I remember the first time I saw a storage area network (SAN). It was 2001, and my employer had just purchased three Dell (EMC) SANs. I worked for the US military at the time, so the three SANs served three networks of varying security levels. We had rack after rack of 36GB hard drives. If you turned out the lights in the data center, you could see hundreds of lights blinking at once. An impressive sight for that particular point in time.

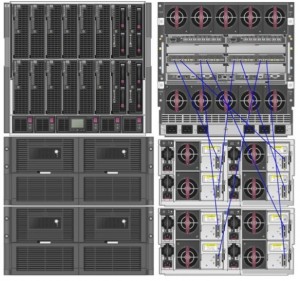

Ten years later, you can fit those 20 or so racks into one or two. And it isn’t just the drive count that has shrunk thanks to bigger individual drives. The servers working with all of that data would be virtualized today, so the two dozen or so physical servers I had attached to those SANs could be consolidated into a single blade enclosure.

Yet when we look at what we have in data centers today, it really hasn’t changed that much. Your typical data-hungry enterprise still has racks of storage. The drives themselves have simply grown much larger, from the old 36GB 10,000 RPM drives I used in my Dell SANs to sizes in excess of 1TB. Most servers are virtualized and running on blades now, but instead of a few dozen servers, I now have hundreds or thousands, which requires a large number of blade enclosures. Even if your blade servers can support half a terabyte of memory, you still need quite a few of them. On the network side, we’ve swapped most of our copper ports for fiber, but the number of fiber ports required has grown tremendously.

For the most part, we’re doing the same things we’ve always done, just on a larger scale. Am I wrong? Isn’t it mostly capacity upgrades? Sure, your VMware ESXi hypervisor is really tiny now. With some of the blade servers out there holding gigantic amounts of RAM, we’re packing dozens of VMs onto a single blade. Our processors have multiple cores and can handle much larger workloads than they could even a couple of years ago. Still, I submit that we’re doing the same thing I was doing 10 years ago with my shiny Dell SANs. I still have a storage silo, a server/compute silo, and a network silo. You can get nit-picky and throw in a virtualization silo if you want.

The thing is, I don’t think keeping these silos is a problem. It works, doesn’t it? Maybe in this modern world of “cloud computing” and the quest for the holy grail of IT services, you disagree. If someone can order application, compute, storage, virtualization, and network services on demand as easily as they order a pizza, does that mean we’ve achieved IT nirvana, or does it just mean we’ve written better software and called it orchestration?

Chew on that for a minute, and yes, I know I am being simplistic and over-generalizing.

Convergence Please!

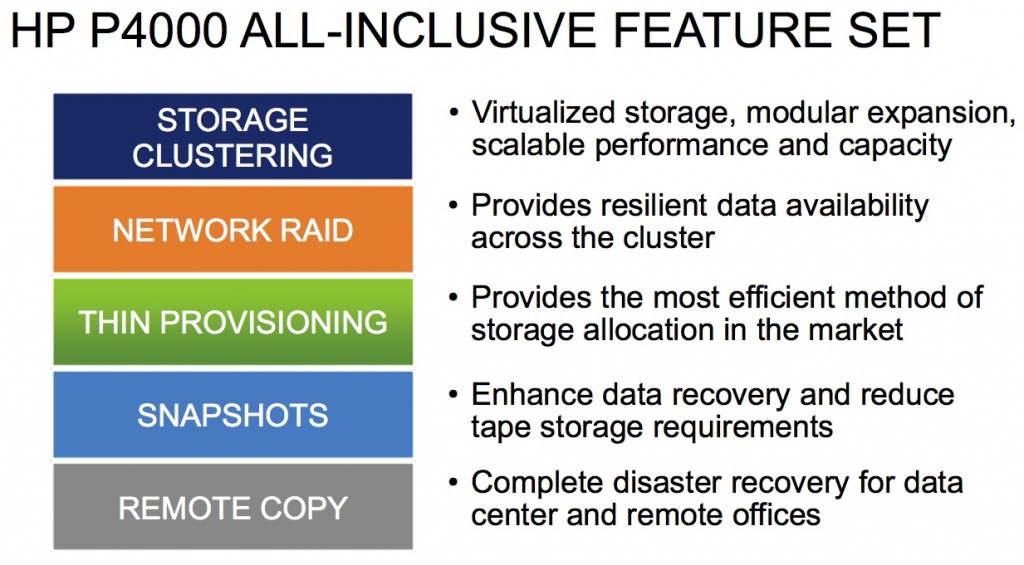

You want to talk convergence? Want to talk about destroying silos? Fine. Let’s talk about that. In fact, I can think of no better example than the “HP P4800 G2 SAN Solution for BladeSystem.” That’s a mouthful, so I will just call it the “data center blender”: all your silos dropped into one package. Is it right for every data center buildout or scenario? Absolutely not. If it were, HP wouldn’t sell storage systems with much larger capacities. However, if the bulk of customers have storage requirements below the cap on this “data center blender”, then I think we have a platform that can exist in many, many data centers. The cap, by the way, is 672TB of raw capacity. I know the usable space would be far less once you account for RAID levels, hot spares, mirrored disk shelves, etc., but it would still be a tremendous amount of storage. I am willing to bet most customers have data requirements well under 500TB. Maybe I am wrong. After all, I am not a storage pro, as you have no doubt figured out by this point in the post. 🙂
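To make the “far less usable space” point a little more concrete, here is a rough back-of-the-envelope calculator in Python. It is a minimal sketch, not HP’s sizing method. Only the 672TB raw figure comes from this post. The drive size, hot spare count, RAID group width, and two-way shelf mirroring are hypothetical numbers I picked for illustration, not the actual P4800 G2 configuration.

```python
from dataclasses import dataclass


@dataclass
class Layout:
    """Hypothetical storage layout; every default except raw_tb is an illustrative assumption."""
    raw_tb: float = 672.0        # raw capacity cap quoted in the post
    drive_tb: float = 2.0        # ASSUMED size of each drive
    hot_spares: int = 12         # ASSUMED drives held back as hot spares
    raid_group_size: int = 12    # ASSUMED drives per RAID group (data + parity)
    parity_per_group: int = 1    # 1 for RAID 5-style parity, 2 for RAID 6-style
    mirror_copies: int = 2       # 2 = every block mirrored to a second disk shelf


def usable_tb(layout: Layout) -> float:
    """Estimate usable capacity after hot spares, parity, and mirroring are subtracted."""
    total_drives = int(layout.raw_tb // layout.drive_tb)
    # Hot spares sit idle until a drive fails, so they contribute no capacity.
    active_drives = total_drives - layout.hot_spares
    # Only whole RAID groups are counted; leftover drives are ignored in this sketch.
    groups = active_drives // layout.raid_group_size
    data_drives = groups * (layout.raid_group_size - layout.parity_per_group)
    # Mirroring stores every block `mirror_copies` times.
    return data_drives * layout.drive_tb / layout.mirror_copies


if __name__ == "__main__":
    print(usable_tb(Layout()))                    # RAID 5-style groups, mirrored shelves
    print(usable_tb(Layout(parity_per_group=2)))  # RAID 6-style groups, mirrored shelves
```

Under those made-up assumptions, 672TB raw works out to 297TB usable with single parity and 270TB with double parity. Mirroring alone halves the raw number, and parity plus spares trim it further.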

How does it work?

Take a C7000 series blade enclosure, put storage controllers in two slots, and use the rest for either more storage controllers or server blades. Add modular disk shelves below the C7000. Cable it all up using the Virtual Connect modules, and bada bing, bada boom: you now have storage, compute, virtualization, and network all in one. This is one of those “Why didn’t I think of that?” products. Of course, you would have to make all the components yourself, which narrows the field to fewer than five companies. HP happens to be one of them, and it sells the most hardware of the bunch.

You can start out relatively small on this particular system. Do you only need two or three blade servers in the C7000? Fine. Buy more as you need them. Just need a few dozen terabytes of storage? Fine. Buy more as you need it. Start small and grow to a pretty large size. Already have C7000 enclosures in your data center? Congratulations, you’re already halfway there.

Do I need to go on? No. You just need to check it out yourself. Here’s a link, and let me throw in some eye candy below.

So there you have it: one example of a converged storage product from HP, and one that will be a great fit for quite a few companies. HP took a very popular product, the C7000 series blade enclosure, and added value by pairing it with a storage system that eliminates the need for a dedicated storage switch.

Closing Thoughts

Convergence is a hot issue these days. If it saves money and speeds up the deployment of IT services, lots of people will be interested. We have become a “must have it now” culture in business as well as in our personal lives. Customers don’t care how long it takes you to provision services. If you are selling it, they want it. When a service like Netflix or Hulu has performance problems, I don’t sit around giving them the benefit of the doubt and theorizing about capacity issues. When it comes to services I am paying for, I don’t care what your problems are. I want it to work the second I need it, or give me my money back and I will go find someone who can deliver.

A word of caution as we start tearing down these silos, though. Storage, compute, virtualization, and network are each very complex long before you start mashing any of them together. If you do not have good people handling these converged products, you are asking for trouble. The compute and virtualization silos are probably close enough that a single person can understand both. The others, well, not so much. That’s not to say an individual cannot have a firm grasp of multiple areas. They can. It just takes work. Hard work. That’s been my biggest concern with some of these convergence plays: they dumb down some very complex systems in the interest of “getting it done”. That’s all well and good when everything works. What about when it breaks? Can you fix what you don’t understand?

Fine Print

This post is the first of three that I will be writing as part of Thomas Jones’ blogger reality show contest. The winner will be announced at VMworld at the end of August. Please vote at the top of this post on whether you liked it. I am up against several other bloggers, and although going to VMworld is reward enough, I can’t pass up an opportunity to compete with other techies! Leave me a comment as well if you liked this post, or even if you hated it. I’m a big boy. I promise you won’t hurt my feelings. 😉

Matt,

Really good post!

I like how at the end you brought up how complex these solutions can be to work on! People just want it to work and sometimes disregard that complexity.

Great post, Matthew! I agree that we are still doing the same things we were doing 10 years ago, just on a larger scale (since prices have decreased) and in a more condensed way… But I’m seeing the limitations of the current architecture in my data center. The controllers aren’t keeping up. The scale-out approach does make sense to me, though. I know the devil is always in the details, i.e., how well does it all really work, but I think the new architecture ideas are answers to real problems.

Convergence is one thing, but modularity is just as important. Modularity helps cut complexity and also lowers capex in data center build-outs. Not to mention critical power, PUE, and LEED certification.

Great post! I am glad you are writing about HP… I would like to see more on the storage side, though. I can roll with the HP server solution, but the idea of moving off NetApp makes my eyes bleed…. 🙂

In reality, isn’t our new world of virtual servers, virtual switches, and whatnot just the old-school data center with mainframes? I mean, think about it: all our “servers” are now just one-offs running one, maybe two, services each, all on one big box.

I would say we have come full circle and are back to the mainframe days, minus the HUGE amounts of space needed for those dinosaurs 🙂