****Note – I am NOT in any way, shape, or form a VMware expert. I can’t guarantee that I will be 100% correct in my terminology or representation of VMware, VMotion, vSphere, etc. I apologize in advance. I am just a network guy trying to understand how the Nexus 1000V ties into the VMware ecosystem. I also understand that companies other than VMware are doing virtualization. Please feel free to correct my inaccuracies via the comments.

Paradigm shifts are coming. Some of them are already here. About 5 or 6 years ago I was first introduced to server virtualization in the form of VMware ESX server. For you old mainframe people, you probably weren’t as impressed as I was when I learned about this particular technology.

When it came to VMware, I wasn’t doing anything fancy. I was just using it to host a few Windows servers. When these boxes were physical, they were only using a fraction of their CPU, memory, and disk space. In most cases, they were specific applications that vendors would only support if they were on their own server. From a networking standpoint, there was absolutely nothing fancy that I was doing. All of the traffic from the virtual machines came out of a shared 1 gig port. For me, VMware was a fantastic product in that it allowed me to reduce power, rack space, and cooling requirements.

I realize that some people will take issue with my use of the term “server virtualization”. To some, software and hardware virtualization are different animals. For the purposes of non-VMware people like myself, the fact that I used VMware to reduce the physical server sprawl means that I refer to it as “server virtualization”.

Fast forward to today. It is getting harder and harder to find a company that isn’t doing some sort of server virtualization. It isn’t just about reducing physical server footprint and maximizing CPU and memory resources. These days, you can achieve phenomenal uptime rates due to things like VMotion. For those who are unfamiliar with VMotion, it is a service within VMware that can move a running virtual machine from one physical host (i.e., an ESX/ESXi server) to another. This can happen because of planned maintenance on the physical host, additional CPU/memory resource requirements, or other reasons that the VMware administrator deems important. (As I understand it, recovering from an outright hardware failure is the job of VMware HA, which restarts the virtual machine on another host rather than moving it live.)

Today, from a networking standpoint, there are 3 options when it comes to networking inside the VMware vSphere 4 ecosystem:

vNetwork Standard Switch – 1 or more of these standard switches reside on a single ESX host. This would be the vSwitch in older versions of ESX. This is basically a no-frills switch. Think of this as managing switches without the use of VTP. You have to touch a lot of these switches if certain VLANs reside on multiple ESX hosts.

vNetwork Distributed Switch – 1 or more of these will reside in a “Datacenter”. By “Datacenter”, I am not referring to a physical location. Rather, in VMware lingo, it is a logical grouping of ESX clusters (which are made up of ESX hosts). This is the equivalent of running VTP across a network of Cisco switches. You can make changes and have them show up on each ESX host that is part of the “Datacenter”. This particular switch type has several advantages over the standard switch in terms of feature availability. It also allows you to move virtual machines between multiple servers via vMotion and have the policies associated with each virtual machine follow it.

Cisco Nexus 1000V – Similar to the distributed switch, except it is built on NX-OS and you can manage it almost like you would any physical Cisco switch. It also has a few features that the regular VMware distributed switch does not.
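To put the VTP analogy in concrete terms, here is a rough Python sketch of the management difference between the standard and distributed switch. It is purely a toy model: the class and function names are made up for illustration and have nothing to do with any real VMware or Cisco API. All it does is count how many places you have to touch to add one VLAN.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class StandardSwitch:
    """Toy stand-in for a per-host standard vSwitch (hypothetical model)."""
    host: str
    vlans: Set[int] = field(default_factory=set)


@dataclass
class DistributedSwitch:
    """Toy stand-in for a switch defined once and shared by many hosts."""
    hosts: List[str]
    vlans: Set[int] = field(default_factory=set)


def add_vlan_standard(switches: List[StandardSwitch], vlan_id: int) -> int:
    """Add a VLAN to every per-host switch; return how many switches were touched."""
    touches = 0
    for sw in switches:
        sw.vlans.add(vlan_id)
        touches += 1
    return touches


def add_vlan_distributed(dvs: DistributedSwitch, vlan_id: int) -> int:
    """Add a VLAN once; in this model every member host sees the same VLAN set."""
    dvs.vlans.add(vlan_id)
    return 1


hosts = ["esx01", "esx02", "esx03", "esx04"]
standard_touches = add_vlan_standard([StandardSwitch(h) for h in hosts], 100)
distributed_touches = add_vlan_distributed(DistributedSwitch(hosts), 100)
print(standard_touches, distributed_touches)  # prints: 4 1
```

With four hosts the standard switch needs four changes and the distributed switch needs one. The gap only grows as you add ESX hosts.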

That’s the basic overview as I understand it. What I had been struggling with was the actual architecture behind it. How does it work? I can look at a physical switch like the 3750 or 6500 and get a fairly decent understanding of it. Not the level I would like to have, but I understand that vendors like Cisco don’t want to give away their “secret sauce” to everyone that comes along and asks for it.

As luck would have it, my company has purchased several instances of the Nexus 1000V and last week, I was able to spend a day with a Cisco corporate resource and one of the server/storage engineers my company employs. I didn’t realize how deficient I was in the world of VMware until I got into a room with these 2 guys and we started talking through how we would design and implement the Nexus 1000V. I kept asking them to explain things over and over. In the end, a fair amount of pictures on the white board caused the light bulb in my head to go active. I still have much reading to do, but for now I understand it a LOT more than I did. Now, let’s see if I can have it make sense to you. 🙂

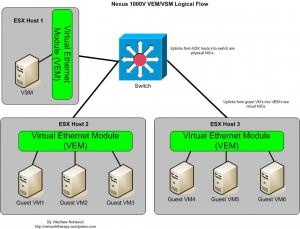

The Nexus 1000V is basically made up of 2 different parts: the VEM and the VSM. If we were to assign these 2 things to actual hardware pieces, the VEM (Virtual Ethernet Module) would be the equivalent of a line card in a switch like the Nexus 7000 or a Catalyst 6500. In essence, this is the data plane. The second piece is the VSM (Virtual Supervisor Module). This is the equivalent of the supervisor module in the Nexus 7000 or Catalyst 6500. As you probably already guessed, this is the control plane piece.

Here’s where it gets a bit crazy. The VSM can support up to 64 VEMs per 1000V. You can also have a second VSM that operates in standby mode until the active one fails. In theory, you have a virtual chassis with 66 slots (2 VSMs plus 64 VEMs). In the Nexus 1000V CLI, you can actually type “show module” and they will all show up. Each ESX host will show up as its own module. Will you ever have 64 VEMs under a single VSM? Maybe. However, there are limitations around the Nexus 1000V that make that unlikely.
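If it helps to see the "virtual chassis" idea as a data structure, here is a minimal Python sketch. It is my own toy model, not anything from Cisco: the class name, the method names, and the listing format are all made up, and it assumes the two supervisor slots come first with the VEMs numbered after them. The listing it prints is not real “show module” output.

```python
MAX_VSMS = 2    # active + standby supervisor
MAX_VEMS = 64   # one VEM per ESX host


class VirtualChassis:
    """Toy model of a Nexus 1000V as a 66-slot chassis (hypothetical)."""

    def __init__(self):
        self.slots = {}  # slot number -> description

    def _first_free(self, first, last):
        """Return the lowest unused slot in [first, last], or None if full."""
        for slot in range(first, last + 1):
            if slot not in self.slots:
                return slot
        return None

    def add_vsm(self, role):
        # Assumption of this sketch: supervisors occupy the first two slots.
        slot = self._first_free(1, MAX_VSMS)
        if slot is None:
            raise RuntimeError("both supervisor slots are in use")
        self.slots[slot] = f"VSM ({role})"
        return slot

    def add_vem(self, esx_host):
        # Each ESX host running a VEM takes one "line card" slot.
        slot = self._first_free(MAX_VSMS + 1, MAX_VSMS + MAX_VEMS)
        if slot is None:
            raise RuntimeError("all 64 VEM slots are in use")
        self.slots[slot] = f"VEM on {esx_host}"
        return slot

    def show_module(self):
        """Return a simple slot listing, lowest slot first."""
        return [f"{slot:>3}  {desc}" for slot, desc in sorted(self.slots.items())]


chassis = VirtualChassis()
chassis.add_vsm("active")
chassis.add_vsm("standby")
for n in range(1, 4):
    chassis.add_vem(f"esx{n:02d}")
print("\n".join(chassis.show_module()))
```

Trying to add a 65th VEM in this model raises an error, which is the 64-VEM limit expressed in code.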

The VEM lives on each ESX server, but where does the VSM reside? It resides in its own guest VM. You actually create a separate virtual machine for the VSM when installing the Nexus 1000V. That guest VM resides on one of the ESX servers within the “Datacenter” that the Nexus 1000V controls. You access that guest VM through the CLI, just like you would a physical switch in your network. Once the VSM is installed, the network engineer can go in via SSH or Telnet and configure away.

Those are the basic components of the Nexus 1000V. There are other things that need to be mentioned, such as how communication happens from the guest VM perspective to the rest of the network and vice versa. Additionally, we need to discuss the benefits of using the Nexus 1000V over the standard VMware distributed switch. There’s a lot more to it than just the management aspect. I will cover that in part 2. I also plan on doing a write-up on the Nexus 1010 appliance. This allows you to REALLY move the control plane piece out of the VMware environment and put it on a box with a Cisco logo on it.

